College is a numbers game.

SAT scores. Extracurriculars. APs.

College is a numbers game.

It’s a statement that almost every high school student hears at least once. It’s tempting to boil the college application process down to a science. According to nonprofit group EDUCAUSE, over 75% of colleges already have. These colleges use algorithms to manage the herd of potential students, taking a comprehensive look at a student’s portfolio and running incredibly complex systems to see exactly how they stack up against the competition. It is, to many, the future of education. There’s just one issue.

The game’s broken.

In attempting to take bias out of the application process, colleges have instead hidden it behind walls of proprietary info, math, and contractors, replacing flawed people with flawed programs. While these models are incredibly efficient at doing what they do, that’s exactly the problem. A faster decision is not a fairer one.

The history of acceptance algorithms — and their biases — begins in 1979. Forced to go through thousands of applications, a doctor at the St. George’s Hospital Medical School in London wrote code to automate the grueling and often inconsistent process. By 1982, this algorithm, which looked at both prospective and previous students to make its recommendations, was universally used by the college.

It wasn’t until a few years later that people started to catch on. As the tech journal IEEE Spectrum reports, in attempting to mimic human behavior, the algorithm had codified bias. A commission found that having a non-European name could take up to 15 points off an applicant, and female applicants were docked an average of three points based solely on their gender. The commission also found that many staff viewed the algorithm as absolute and unquestionable. If a student was denied? Well, they just should have had better numbers.

While the school was eventually found guilty of discrimination in admissions, the growth of algorithms was too big to stop. According to a recent Pew Research Center study, the number of undergraduate applications more than doubled from 2002 to 2017. In addition, the Center on Budget and Policy Priorities found that overall state funding for public universities fell by more than $6.6 billion from 2008 to 2018. As a result of these logistical challenges, algorithms have largely replaced humans as the initial screen for applications.

“You want to be able to select students who have demonstrated in high school that they have the ability to be successful on your campus, and that varies from college to college,” Dr. Gordon Chavis, the Associate Vice President for Enrollment Services at UCF, said.

Chavis helps manage over 60,000 applications and the over $600 million in financial aid the school gives out each year.

“We tend to use phrases like, ‘Do we have the resources? And do we have the programming to support a student as he or she attempts to become successful and graduate from university?’” he said.

Most of the time, this selection involves predictive modeling. In an effort to wade through the pile of applications, the vast majority of schools outsource this job to consulting companies. These companies compile data and recommendations, researching things like academic reports or intended majors.

However, this data can sometimes go … further. As Slate Magazine found earlier this year, companies often use browsing histories, student profiles, an opaque “affinity index” and, of particular interest to many schools, ethnic identity and household income. This data, usually collected without students’ knowledge or consent, is used by colleges for the stated goal of figuring out whether a student is likely to attend, and then weighing each increase in offered scholarships against the increased chance of enrollment.

This process is nothing new. However, in recent years colleges have turned to algorithms for class optimization. By running thousands of models, algorithms are able to figure out “how to invest the minimum amount of aid necessary” and still get proper class sizes. At least, that’s how Othot, an analytics company serving 30 colleges, advertises it.
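As a rough illustration of what “the minimum amount of aid necessary” can mean in practice, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the applicants, the percentages, the $1,000 step and the greedy rule are hypothetical, and it does not describe Othot’s or any other vendor’s actual model.

```python
from dataclasses import dataclass

STEP = 1000  # assumption: aid is offered in $1,000 increments


@dataclass
class Applicant:
    name: str
    base_pct: int         # hypothetical chance (in %) of enrolling with no aid
    gain_pct_per_1k: int  # hypothetical gain in that chance per $1,000 of aid


def enroll_pct(applicant, aid):
    """Chance of enrolling, in percent, capped at 100."""
    return min(100, applicant.base_pct + applicant.gain_pct_per_1k * (aid // STEP))


def minimum_aid_plan(applicants, target_class_size, max_aid=20000):
    """Greedily give each $1,000 to whoever's enrollment odds rise the most."""
    aid = {a.name: 0 for a in applicants}
    expected_pct = sum(enroll_pct(a, 0) for a in applicants)
    while expected_pct < target_class_size * 100:
        best, best_gain = None, 0
        for a in applicants:
            if aid[a.name] + STEP > max_aid:
                continue  # this applicant has hit the per-student aid cap
            gain = enroll_pct(a, aid[a.name] + STEP) - enroll_pct(a, aid[a.name])
            if gain > best_gain:
                best, best_gain = a, gain
        if best is None:  # no remaining offer moves anyone; target unreachable
            break
        aid[best.name] += STEP
        expected_pct += best_gain
    return aid, expected_pct / 100


# Made-up applicants, for illustration only.
applicants = [
    Applicant("A", base_pct=50, gain_pct_per_1k=5),
    Applicant("B", base_pct=20, gain_pct_per_1k=10),
    Applicant("C", base_pct=90, gain_pct_per_1k=1),
]
plan, expected_size = minimum_aid_plan(applicants, target_class_size=2)
print(plan, expected_size)
```

In this made-up example, every dollar goes to applicant B, the one whose decision is cheapest to sway. Nothing in the objective looks at financial need or at whether the student will graduate.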

To colleges, this is just numbers. But for students, these models only worsen existing problems. Think tank New America reports that the share of financial need met by colleges has fallen steadily since 2001, and a study by UCLA found that while a $1,000 increase in aid increases a student’s chance to graduate by 1%, a $1,000 decrease lowers that chance by over 5%. Enrollment algorithms consider how to make a student enroll, but not how to make them succeed. With students backed into a corner by the student debt crisis and falling acceptance rates, algorithms have become the perfect predator.

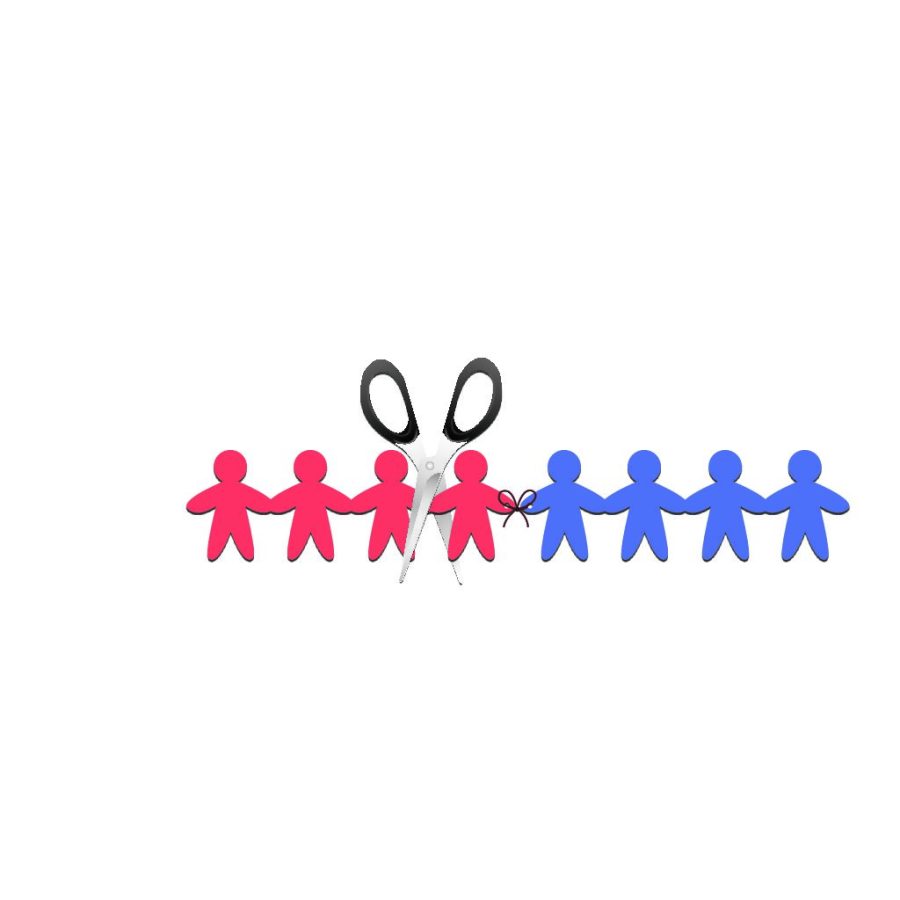

Not every student is affected equally, though. As the Brookings Institution points out, if an algorithm decides that Black applicants would require too much aid, it will simply cut them out of the class. Colleges already leave students out: as investigative journalism group The Hechinger Report found earlier this year, 15 state flagship universities had at least a 10-point gap between the percentage of Black and Latino students among their states’ high school graduates and the percentage among students who enrolled that fall. When combined with these models, these discrepancies can create a self-fueling cycle, as algorithms look at past decisions and build failed judgements on failed judgements.

The problems don’t stop when students get into college, either. Analytics group EAB has licensed its predictive model, Navigate, to over 500 schools across the nation. However, in a study by tech newspaper The Markup that led to universities like Texas A&M dropping the program, public records showed that the EAB algorithm labeled Black and Latino students as “high-risk” much more often than white students, even if all other factors were identical. For example, at the University of Massachusetts Amherst, Black men were 3.9 times more likely to be flagged than white men. This type of data can push students out of more difficult courses and influence advisors, all because of a student’s skin color.
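A disparity like that one can be expressed as a ratio of flag rates between two groups. The Python sketch below assumes a hypothetical list of student records with made-up `group` and `high_risk` fields. The counts are invented so that the ratio comes out to 3.9; they are not the UMass Amherst data.

```python
from collections import Counter


def flag_rate_ratio(students, group, reference):
    """How many times more often `group` is flagged high-risk than `reference`."""
    totals = Counter(s["group"] for s in students)
    flagged = Counter(s["group"] for s in students if s["high_risk"])
    if totals[group] == 0 or flagged[reference] == 0:
        raise ValueError("need students in `group` and flagged students in `reference`")
    # (flagged_g / total_g) / (flagged_r / total_r), rearranged into one division
    return (flagged[group] * totals[reference]) / (flagged[reference] * totals[group])


# Invented records: 39 of 100 flagged in group "X", 10 of 100 in group "Y".
students = (
    [{"group": "X", "high_risk": True}] * 39
    + [{"group": "X", "high_risk": False}] * 61
    + [{"group": "Y", "high_risk": True}] * 10
    + [{"group": "Y", "high_risk": False}] * 90
)
ratio = flag_rate_ratio(students, group="X", reference="Y")
print(ratio)
```

A raw ratio like this is only a first check. A fuller audit would also compare students whose other factors are the same, which this sketch does not attempt.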

Discrimination in education is nothing new. But now, it’s a science. Allowing colleges to reject poor or minority students simply because it’s not the most profitable choice is a betrayal of the standards of education, not to mention a violation of civil rights law.

Colleges can start making changes by ending class optimization through scholarships. How much aid a student gets should be based on their merit and need, not a magic number that has been designed specifically to entrap them. Colleges should also focus on removing race and other factors from algorithmic consideration of a student, as has been the case in Florida since 2008.

However, the most important thing that colleges, and governments, must do is demand more transparency. The vast majority of colleges buy algorithms from vendors rather than developing them in-house. Under the claim of proprietary information, these vendors hide their models, and colleges are more than happy not to ask questions as long as they make a nice profit. Pressure on these companies, both to disclose their models and to perform regular bias audits, could clear up concerns about how these models are being used.

“Any student, whether they are an ethnic minority, or don’t have the ability to go to college or to afford college, as long as the processes are equitable and fair are going to get a fair shot in terms of support to attend college,” Chavis said. “I’ve had a career for about 22 years and in the business, I don’t know of an institution who isn’t willing to support students.”

Algorithms will never be completely fair, but ignoring that fact is only going to make them worse.

Turns out, college is a numbers game. And when the house wins, everyone loses.